What is SEO? [Search Engine Optimization]

Introduction To SEO

When you type something into a search engine, you get a results page listing webpages that may contain what you searched for. You, like most users, tend to open the pages at the top of these results because they are thought to be more accurate. The real reason they appear at the top, however, lies in the ingenuity of a marketing technique called Search Engine Optimization (SEO).

SEO is the key that helps your webpage climb the rankings ahead of other sites. In short, it directs more traffic to your website whenever someone searches for something related to your content.

This article is dedicated to SEO and will answer your questions about what it does and how it works with the most widely used search engines.

How Does It Work?

One obvious distinction deserves to be stated plainly: the one searching is human, but the one doing the search is not. Each has its own way of looking for content. Search engines depend on text, which means they do not judge a webpage by its good looks or pleasant sounds. No hard feelings; in fact, no feelings at all. Within the limits of their abilities, search engines scan the web and look at websites mainly through their text. Once they have an idea of a page's content, they either pick it up or pass over it if it does not fit. Producing the final list of results involves more than that single decision, though: search engines perform a series of activities, namely crawling, indexing, processing, calculating relevancy, and retrieving.


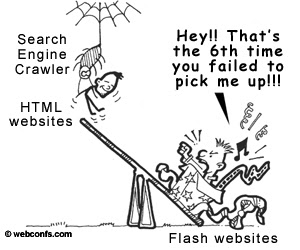

Crawling is the act of discovering and reading the content on the web. Different engines have different names for the software that performs this task, such as Crawler or Spider; Google's is called Googlebot. These bots go from page to page and collect everything they read so it can be indexed. Because the web holds an enormous number of pages (more than 20 billion), the bots may not be able to revisit every webpage every day to check whether it has been updated with new content. A site may go without a visit for a month or so.

To make the most of each visit, first find out what these bots (spiders) can actually see on your website. Since they are not human, they won't see flashy content such as images, movies, or JavaScript. If you have loaded your webpage with such elements, much of it may be invisible to spiders and may not be indexed or processed further. To check, you can run the Spider Simulator.
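To get a feel for what a text-only spider sees, here is a minimal Python sketch. It assumes a hypothetical in-memory set of pages in place of real HTTP fetching, and the names `PAGES`, `SpiderView`, and `crawl` are illustrative only. It crawls breadth-first by following links and, for each page, keeps only the readable text: script contents are skipped and an image contributes nothing but its alt text. It is a toy model of the behavior described above, not how Googlebot or any real crawler is implemented.

```python
from collections import deque
from html.parser import HTMLParser

# Hypothetical in-memory "web": URL -> HTML. A real spider would fetch these over HTTP.
PAGES = {
    "/home": '<h1>Fresh bread</h1><img src="loaf.jpg" alt="sourdough loaf">'
             '<script>showBanner()</script><a href="/recipes">Recipes</a>',
    "/recipes": '<p>Sourdough recipe</p><a href="/home">Home</a><a href="/missing">Old</a>',
}


class SpiderView(HTMLParser):
    """Collects the text and links a text-only spider would 'see' on one page."""

    def __init__(self):
        super().__init__()
        self.words, self.links = [], []
        self._skip = 0  # depth inside <script>/<style>, whose contents are ignored

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag in ("script", "style"):
            self._skip += 1
        elif tag == "a" and attrs.get("href"):
            self.links.append(attrs["href"])
        elif tag == "img" and attrs.get("alt"):
            # The image itself is invisible; only its alt text is readable.
            self.words.extend(attrs["alt"].split())

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if not self._skip:
            self.words.extend(data.split())


def crawl(start, max_pages=100):
    """Breadth-first crawl: visit a page, record what it shows, queue its links."""
    seen, queue, visible = {start}, deque([start]), {}
    while queue and len(visible) < max_pages:
        url = queue.popleft()
        html = PAGES.get(url)
        if html is None:  # broken link: nothing to read
            continue
        view = SpiderView()
        view.feed(html)
        view.close()
        visible[url] = view.words
        for link in view.links:
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return visible


for url, words in crawl("/home").items():
    print(url, words)
```

Running it prints the words each page exposes; `showBanner()` never appears, and the image shows up only as "sourdough loaf". The `max_pages` budget is a rough stand-in for the limited crawl capacity that keeps bots from revisiting every page daily.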

The next step after crawling is indexing. The page is stored in a huge database and associated with the keywords that, in the search engine's judgment, best describe its content; the page can later be picked from this record whenever those keywords are searched for. Imagine the time and energy a human would need to scan that much data; for a search engine it is a matter of minutes. Being merely software, the engine may not always match a page to the right keywords, but optimizing your page helps on both sides: the engine becomes more accurate, and you achieve a higher ranking.
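The keyword-to-page record described above is usually called an inverted index. The following is a minimal Python sketch, assuming a hypothetical handful of page texts and treating every word as a keyword; real engines process terms far more elaborately. The names `pages`, `tokenize`, and `build_index` are illustrative.

```python
import re
from collections import defaultdict

# Hypothetical page texts, e.g. the words a spider extracted while crawling.
pages = {
    "/bread": "Sourdough bread baking guide for beginners",
    "/cakes": "Chocolate cake baking tips",
    "/shop": "Buy bread online",
}


def tokenize(text):
    """Lower-case the text and split it into alphanumeric keywords."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(pages):
    """Map each keyword to the set of pages that contain it (an inverted index)."""
    index = defaultdict(set)
    for url, text in pages.items():
        for word in tokenize(text):
            index[word].add(url)
    return index


index = build_index(pages)
print(sorted(index["bread"]))   # ['/bread', '/shop']
print(sorted(index["baking"]))  # ['/bread', '/cakes']
```

Looking up a keyword is now a single dictionary access rather than a scan of every page, which is why the index is built ahead of time instead of at search time.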

When a user enters a search, the search engine immediately starts processing it by comparing the search text with the indexed data. Millions of pages may contain the search string, so the engine shortlists them further by calculating how relevant each page is to the search.
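The two stages in this paragraph, matching the query against the index and then scoring the matches, can be sketched in Python. This assumes a tiny hypothetical set of pages and a deliberately naive relevancy measure (how often the query words appear on the page); real engines combine many more signals. The names `pages`, `index`, and `process_query` are illustrative.

```python
import re
from collections import Counter, defaultdict

# Hypothetical pages; in practice these would come from the crawl and index steps.
pages = {
    "/a": "bread recipe: easy bread, quick bread",
    "/b": "bread shop opening hours",
    "/c": "cake recipe with a bread crust recipe",
}


def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())


# Inverted index that also stores how often each word occurs on each page.
index = defaultdict(Counter)
for url, text in pages.items():
    for word in tokenize(text):
        index[word][url] += 1


def process_query(query):
    """Return {page: relevancy} for pages containing every query term.

    Relevancy here is a simplifying assumption: the total number of
    times the query terms occur on the page.
    """
    terms = tokenize(query)
    if not terms:
        return {}
    # Step 1: compare the search text with the index to shortlist candidates.
    candidates = set(index.get(terms[0], ()))
    for term in terms[1:]:
        candidates &= set(index.get(term, ()))
    # Step 2: score each remaining candidate against the query.
    return {url: sum(index[t][url] for t in terms) for url in candidates}


print(sorted(process_query("bread recipe").items()))  # [('/a', 4), ('/c', 3)]
```

All three pages contain "bread", but only `/a` and `/c` survive the shortlist, and the scoring step then separates those two.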

If you think all search engines use the same algorithm to compute the relevancy of content, think again. There are many algorithms, each weighting factors such as links, meta tags, and keywords differently. That is why the same search words return different results on different engines. Major engines such as Yahoo!, Google, and Bing also change their algorithms regularly, so in the race for higher rankings you must keep your SEO campaign up to date with them.
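To see why different weightings change the results, here is a Python sketch assuming a simple weighted-sum model of relevancy. The engine names, the three signals, and every number are hypothetical; they are not real weights from Yahoo!, Google, or Bing.

```python
# Hypothetical per-page signal scores on a 0-1 scale; the numbers are made up.
pages = {
    "/guide": {"links": 0.9, "meta_tags": 0.2, "keywords": 0.3},
    "/blog": {"links": 0.2, "meta_tags": 0.6, "keywords": 0.9},
    "/shop": {"links": 0.5, "meta_tags": 0.5, "keywords": 0.5},
}

# Two hypothetical engines that weight the same factors differently.
WEIGHTS = {
    "link_heavy_engine": {"links": 0.7, "meta_tags": 0.1, "keywords": 0.2},
    "keyword_heavy_engine": {"links": 0.1, "meta_tags": 0.2, "keywords": 0.7},
}


def relevancy(signals, weights):
    """Weighted sum of a page's signals (a simple linear scoring assumption)."""
    return sum(weights[name] * value for name, value in signals.items())


def rank(engine):
    """Order the pages from most to least relevant under one engine's weights."""
    weights = WEIGHTS[engine]
    return sorted(pages, key=lambda url: relevancy(pages[url], weights), reverse=True)


for engine in WEIGHTS:
    print(engine, rank(engine))
```

With identical pages and signals, the link-heavy profile ranks `/guide` first while the keyword-heavy profile ranks `/blog` first, mirroring how one search can return different listings on different engines.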

Retrieving comes last. As the name suggests, it is simply the listing of the results in your browser, usually ordered from top to bottom by decreasing relevancy.
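Retrieval itself is little more than sorting. A minimal Python sketch, assuming the relevancy scores have already been computed (the URLs and numbers are hypothetical) and that results are shown a fixed number per results page; `retrieve` and its parameters are illustrative names.

```python
# Hypothetical relevancy scores produced by the earlier ranking step.
scores = {"/a": 0.42, "/b": 0.91, "/c": 0.42, "/d": 0.15}


def retrieve(scores, page=1, per_page=10):
    """Return one results page, ordered from most to least relevant.

    Ties are broken alphabetically by URL so the listing is stable (an assumption).
    """
    ordered = sorted(scores.items(), key=lambda item: (-item[1], item[0]))
    start = (page - 1) * per_page
    return [url for url, _ in ordered[start:start + per_page]]


print(retrieve(scores, per_page=3))          # ['/b', '/a', '/c']
print(retrieve(scores, page=2, per_page=3))  # ['/d']
```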

Different Engines Do Differ!

The underlying process is the same for every engine. Small differences in principle, however, end up as major variations in how relevant a webpage is judged to be, because each engine gives each factor a different level of importance. Buzz in the SEO community has it that Bing's algorithms were designed as the exact opposite of Google's; you can imagine how different the results are. If you are targeting more than one search engine, you will need to optimize very carefully.

Varying importance means, for example, that Bing treats on-page keywords as the most important factor, whereas Google has its eyes on links. Another example is the age of the domain: for Google, older is better, but Yahoo! has no such tradition. This also means your site may take longer to rank well on Google than on Yahoo!, so keep a lookout for these little details and you will be on your way up the rankings.
